Imagine training a model on raw data without cleaning, transforming, or optimizing it.

The results?

Poor predictions, wasted resources, and suboptimal performance. Feature engineering is the art of extracting the most relevant insights from data, ensuring that machine learning models work efficiently.

Whether you’re dealing with structured data, text, or images, mastering feature engineering can be a game-changer. This guide covers the most effective techniques and best practices to help you build high-performance models.

What is Feature Engineering?

Feature engineering is the process of converting raw data into useful input variables (features) that improve the performance of machine learning models. It helps in choosing the most useful features to enhance a model’s ability to learn patterns and make good predictions.

Feature engineering encompasses techniques like feature scaling, encoding categorical variables, feature selection, and building interaction terms.

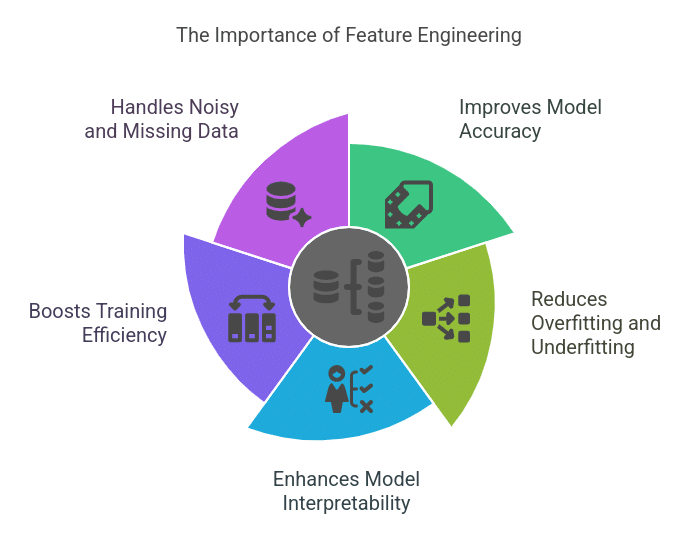

Why is Feature Engineering Important for Predictive Modeling?

Feature engineering is one of the most critical steps in machine learning. Even the most advanced algorithms can fail if they are trained on poorly designed features. Here’s why it matters:

1. Improves Model Accuracy

A well-engineered feature set allows a model to capture patterns more effectively, leading to higher accuracy. For example, converting a date column into “day of the week” or “holiday vs. non-holiday” can improve sales forecasting models.

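2. Reduces Overfitting and Underfitting

By removing irrelevant or highly correlated features, feature engineering helps prevent the model from memorizing noise (overfitting) and helps it generalize well to unseen data. Adding informative features, in turn, gives a model that is too simple enough signal to capture the pattern (reducing underfitting).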

3. Enhances Model Interpretability

Features that align with domain knowledge make the model’s decisions more explainable. For instance, in fraud detection, a feature like “number of transactions per hour” is more informative than raw timestamps.

4. Boosts Training Efficiency

Reducing the number of unnecessary features decreases computational complexity, making training faster and more efficient.

5. Handles Noisy and Missing Data

Raw data is often incomplete or contains outliers. Feature engineering helps clean and structure this data, ensuring better learning outcomes.

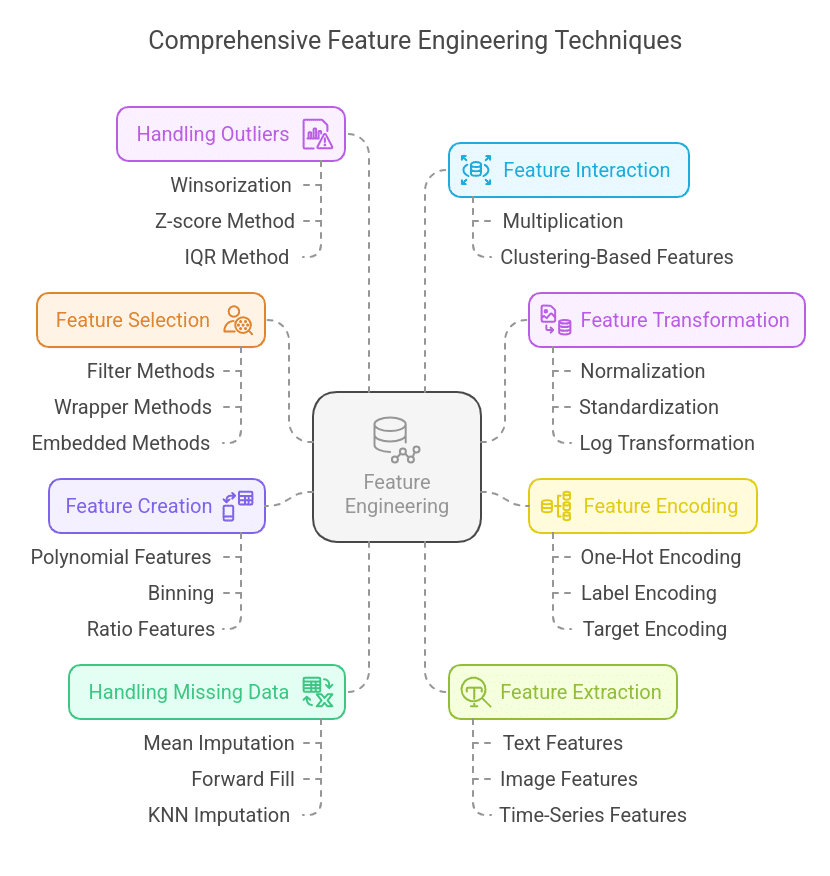

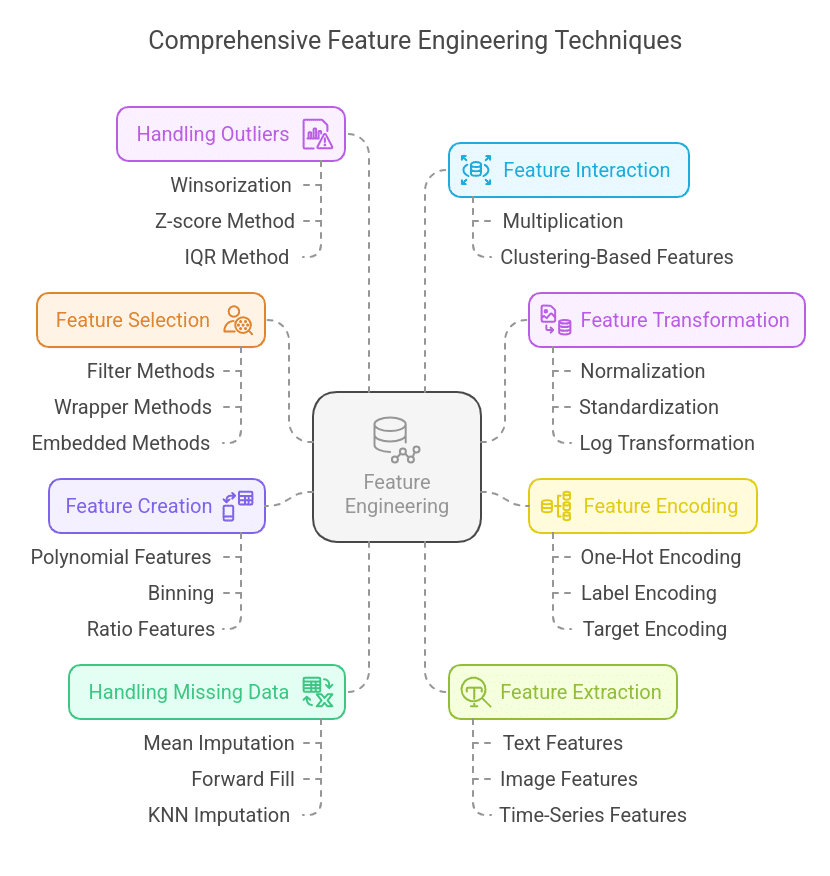

Key Techniques of Feature Engineering

1. Feature Selection

Selecting the most relevant features while eliminating redundant, irrelevant, or highly correlated variables helps improve model efficiency and accuracy.

Techniques:

- Filter Methods: Use statistical measures like correlation, variance threshold, or mutual information to select important features.

- Wrapper Methods: Use iterative search procedures like Recursive Feature Elimination (RFE) and stepwise selection.

- Embedded Methods: Feature selection is built into the algorithm, as in Lasso Regression (L1 regularization) or decision tree-based models.

Example: Removing one of two highly correlated features like “Total Sales” and “Average Monthly Sales” if one can be derived from the other.
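As a rough illustration of a filter method, the sketch below drops one feature from each highly correlated pair using pandas. The column names, the sample values, the 0.9 threshold, and the helper `drop_highly_correlated` are all assumptions made for this example rather than part of any library.

```python
import numpy as np
import pandas as pd


def drop_highly_correlated(df: pd.DataFrame, threshold: float = 0.9) -> pd.DataFrame:
    """Drop the later column of every pair whose absolute correlation exceeds threshold."""
    corr = df.corr().abs()
    # Keep only the strict upper triangle so each pair is inspected once.
    upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
    to_drop = [col for col in upper.columns if (upper[col] > threshold).any()]
    return df.drop(columns=to_drop)


# Hypothetical data: total_sales is exactly 12 x avg_monthly_sales.
sales = pd.DataFrame({
    "total_sales": [1200.0, 2400.0, 3600.0, 600.0, 4800.0],
    "avg_monthly_sales": [100.0, 200.0, 300.0, 50.0, 400.0],
    "store_age_years": [3.0, 10.0, 1.0, 7.0, 5.0],
})

reduced = drop_highly_correlated(sales)
print(list(reduced.columns))
```

Which column of a correlated pair to keep is a judgment call; this sketch simply keeps the one that appears first.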

2. Feature Transformation

Transforms raw data to improve model learning by making it more interpretable or reducing skewness.

Techniques:

- Normalization (Min-Max Scaling): Rescales values to the range 0 to 1. Useful for distance-based models like k-NN.

- Standardization (Z-score Scaling): Transforms data to have a mean of 0 and standard deviation of 1. Works well for gradient-based models like logistic regression.

- Log Transformation: Compresses right-skewed, positive-valued data so that its distribution is closer to normal.

- Power Transformation (Box-Cox, Yeo-Johnson): Used to stabilize variance and make data more normal-like.

Example: Scaling customer income before using it in a model to prevent its large values from dominating other features.
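The two scaling techniques above can be sketched with scikit-learn as follows. The income values are made up for illustration; `MinMaxScaler` and `StandardScaler` are the library's standard preprocessing classes.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical customer incomes, one feature per column.
income = np.array([[20_000.0], [35_000.0], [50_000.0], [120_000.0]])

# Min-max scaling maps the smallest value to 0 and the largest to 1.
min_max = MinMaxScaler().fit_transform(income)

# Standardization subtracts the mean and divides by the standard deviation.
standardized = StandardScaler().fit_transform(income)

print(min_max.ravel())
print(standardized.ravel())
```

In a real project the scaler would normally be fitted on the training split only and then applied to new data with `transform`.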

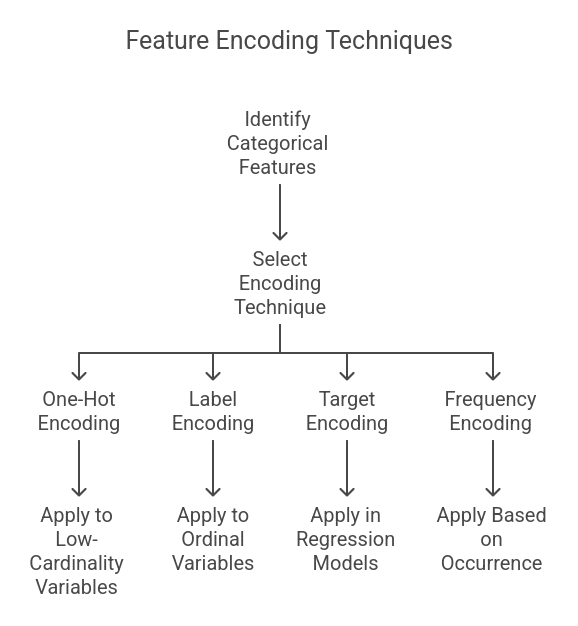

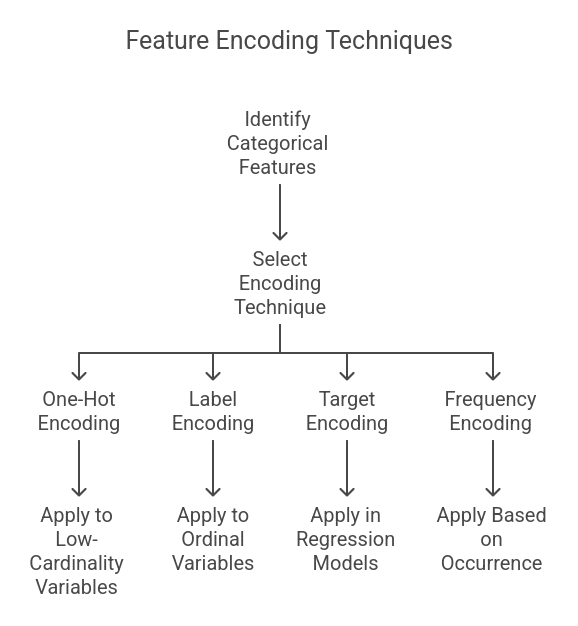

3. Feature Encoding

Categorical features must be converted into numerical values for machine learning models to process.

Techniques:

- One-Hot Encoding (OHE): Creates a binary column for each category (suitable for low-cardinality categorical variables).

- Label Encoding: Assigns an integer to each category (useful for ordinal categories like “low,” “medium,” “high”).

- Target Encoding: Replaces each category with the mean target value for that category (commonly used in regression models).

- Frequency Encoding: Replaces each category with its occurrence frequency in the dataset.

Example: Converting “City” into multiple binary columns using one-hot encoding:

| City | New York | San Francisco | Chicago |
| --- | --- | --- | --- |
| NY | 1 | 0 | 0 |
| SF | 0 | 1 | 0 |
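A minimal pandas sketch of three of these encodings is shown below. The data frame, its column names, and the ordinal mapping are hypothetical and chosen only to mirror the examples in the text.

```python
import pandas as pd

df = pd.DataFrame({
    "city": ["New York", "San Francisco", "Chicago", "New York"],
    "risk": ["low", "high", "medium", "low"],
})

# One-hot encoding: one binary column per city (columns come out alphabetically).
one_hot = pd.get_dummies(df["city"], dtype=int)

# Ordinal (label) encoding with an explicit order, so that low < medium < high.
risk_order = {"low": 0, "medium": 1, "high": 2}
df["risk_encoded"] = df["risk"].map(risk_order)

# Frequency encoding: share of rows in which each city appears.
df["city_freq"] = df["city"].map(df["city"].value_counts(normalize=True))

print(one_hot)
print(df)
```

Spelling out the mapping for ordinal categories avoids relying on alphabetical order, which would otherwise rank “high” below “low”.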

4. Feature Creation (Derived Features)

Feature creation involves constructing new features from existing ones to provide additional insights and improve model performance. Well-crafted features can capture hidden relationships in data, making patterns more evident to machine learning models.

Techniques:

- Polynomial Features: Useful for models that need to capture non-linear relationships between variables.
  - Example: If a linear model cannot capture a curved relationship, adding polynomial terms like x², x³, or interaction terms (x1 * x2) can improve performance.
  - Use Case: Predicting house prices based on features like square footage and number of rooms. Instead of just using square footage, a model may benefit from an interaction term like square_footage * number_of_rooms.

- Binning (Discretization): Converts continuous variables into categorical bins to simplify the relationship.
  - Example: Instead of using raw age values (22, 34, 45), we can group them into bins:
    - Young (18-30)
    - Middle-aged (31-50)
    - Senior (51+)
  - Use Case: Credit risk modeling, where different age groups have different risk levels.

- Ratio Features: Creating ratios between two related numerical values to normalize the impact of scale.
  - Example: Instead of using income and loan amount separately, use Income-to-Loan Ratio = Income / Loan Amount to standardize comparisons across different income levels.
  - Use Case: Loan default prediction, where individuals with a higher debt-to-income ratio are more likely to default.

- Time-based Features: Extract meaningful signals from timestamps, such as:
  - Hour of the day (helps in traffic analysis)
  - Day of the week (useful for sales forecasting)
  - Season (important for retail and tourism industries)
  - Use Case: Predicting e-commerce sales by analyzing trends based on weekdays vs. weekends.

Example:

| Timestamp | Hour | Day of Week | Month | Season |
| --- | --- | --- | --- | --- |
| 2024-02-15 14:30 | 14 | Thursday | 2 | Winter |
| 2024-06-10 08:15 | 8 | Monday | 6 | Summer |
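The derived features described in this section could be built with pandas roughly as follows. The column names and sample rows are hypothetical, and the month-to-season mapping assumes Northern Hemisphere meteorological seasons.

```python
import pandas as pd

df = pd.DataFrame({
    "timestamp": pd.to_datetime(["2024-02-15 14:30", "2024-06-10 08:15"]),
    "age": [22, 45],
    "income": [60_000.0, 90_000.0],
    "loan_amount": [20_000.0, 45_000.0],
})

# Time-based features extracted from the timestamp.
df["hour"] = df["timestamp"].dt.hour
df["day_of_week"] = df["timestamp"].dt.day_name()
df["month"] = df["timestamp"].dt.month

# Assumption: Northern Hemisphere seasons by calendar month.
season_by_month = {
    12: "Winter", 1: "Winter", 2: "Winter",
    3: "Spring", 4: "Spring", 5: "Spring",
    6: "Summer", 7: "Summer", 8: "Summer",
    9: "Autumn", 10: "Autumn", 11: "Autumn",
}
df["season"] = df["month"].map(season_by_month)

# Binning: intervals are (17, 30], (30, 50], (50, 120].
df["age_group"] = pd.cut(
    df["age"], bins=[17, 30, 50, 120], labels=["Young", "Middle-aged", "Senior"]
)

# Ratio feature.
df["income_to_loan"] = df["income"] / df["loan_amount"]

print(df)
```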

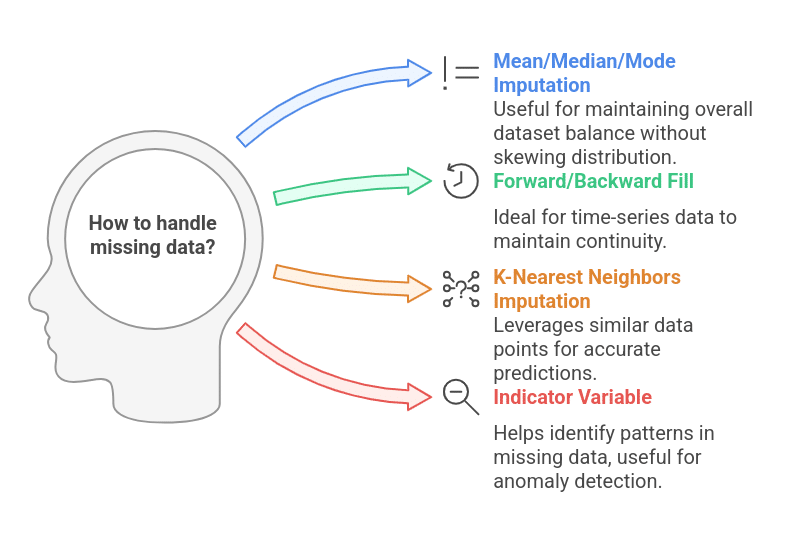

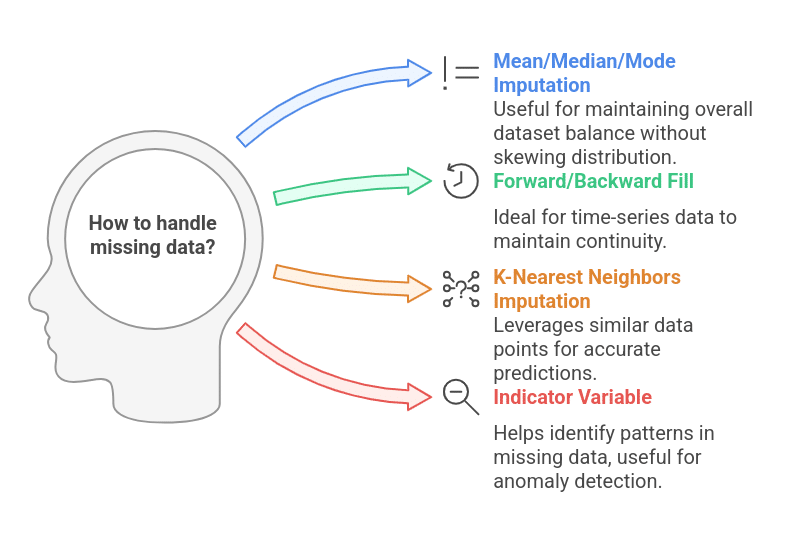

5. Handling Missing Data

Missing data is common in real-world datasets and can negatively affect model performance if not handled properly. Instead of simply dropping missing values, feature engineering techniques help retain valuable information while minimizing bias.

Techniques:

- Mean/Median/Mode Imputation:
  - Fills missing values with the mean or median (for numerical data) or the mode (for categorical data).
  - Example: Filling missing salary values with the median salary of the dataset to avoid skewing the distribution.

- Forward or Backward Fill (Time-Series Data):
  - Forward fill: Uses the last known value to fill missing entries.
  - Backward fill: Uses the next known value to fill missing entries.
  - Use Case: Stock market data where missing prices can be filled with the previous day’s prices.

- K-Nearest Neighbors (KNN) Imputation:
  - Uses similar data points to estimate missing values.
  - Example: If a person’s income is missing, KNN can estimate it based on people with similar job roles, education levels, and locations.

- Indicator Variable for Missingness:
  - Creates a binary column (1 = Missing, 0 = Present) to retain missing data patterns.
  - Use Case: Detecting fraudulent transactions where missing values themselves may indicate suspicious activity.

Example:

| Customer ID | Age | Salary | Salary Missing Indicator |
| --- | --- | --- | --- |
| 101 | 35 | 50,000 | 0 |
| 102 | 42 | NaN | 1 |
| 103 | 29 | 40,000 | 0 |
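The table above can be reproduced with a short pandas sketch that combines a missingness indicator with median imputation, plus a forward fill for a small price series. The column names and the price values are illustrative assumptions.

```python
import numpy as np
import pandas as pd

customers = pd.DataFrame({
    "customer_id": [101, 102, 103],
    "age": [35, 42, 29],
    "salary": [50_000.0, np.nan, 40_000.0],
})

# Record which rows were missing BEFORE imputing, otherwise the pattern is lost.
customers["salary_missing"] = customers["salary"].isna().astype(int)

# Median imputation: the median of the observed salaries fills the gap.
customers["salary"] = customers["salary"].fillna(customers["salary"].median())

# Forward fill for time-ordered data: carry the last known price forward.
prices = pd.Series(
    [101.5, np.nan, 103.0],
    index=pd.date_range("2024-01-01", periods=3, freq="D"),
)
filled_prices = prices.ffill()

print(customers)
print(filled_prices)
```

For KNN-based imputation, scikit-learn provides `KNNImputer` in `sklearn.impute`, which follows the same fit/transform pattern.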

6. Feature Extraction

Feature extraction involves deriving new, meaningful representations from complex data formats like text, images, and time-series. This is especially useful in high-dimensional datasets.

Techniques:

- Text Features: Convert textual data into numerical form for machine learning models.
  - Bag of Words (BoW): Represents text as word frequencies in a matrix.
  - TF-IDF (Term Frequency-Inverse Document Frequency): Weights each word by its frequency in a document relative to its frequency across the whole dataset.
  - Word Embeddings (Word2Vec, GloVe, BERT): Capture the semantic meaning of words.
  - Use Case: Sentiment analysis of customer reviews.

- Image Features: Extract important patterns from images.
  - Edge Detection: Identifies object boundaries in images (useful in medical imaging).
  - Histogram of Oriented Gradients (HOG): Used in object detection.
  - CNN-based Feature Extraction: Uses deep learning models like ResNet and VGG for automatic feature learning.
  - Use Case: Facial recognition, self-driving car object detection.

- Time-Series Features: Extract meaningful trends and seasonality from time-series data.
  - Rolling Averages: Smooth out short-term fluctuations.
  - Seasonal Decomposition: Separates trend, seasonality, and residual components.
  - Autoregressive Features: Use past values (lags) as inputs for predictive models.
  - Use Case: Forecasting electricity demand based on historical consumption patterns.

- Dimensionality Reduction (PCA, t-SNE, UMAP):
  - PCA (Principal Component Analysis) reduces high-dimensional data while preserving as much variance as possible.
  - t-SNE and UMAP are useful for visualizing clusters in large datasets.
  - Use Case: Reducing thousands of customer behavior variables into a few principal components for clustering.

Example:

For text analysis, TF-IDF converts raw sentences into numerical form:

| Term | “AI is transforming healthcare” | “AI is advancing research” |
| --- | --- | --- |
| AI | 0.4 | 0.3 |
| transforming | 0.6 | 0.0 |
| research | 0.0 | 0.7 |

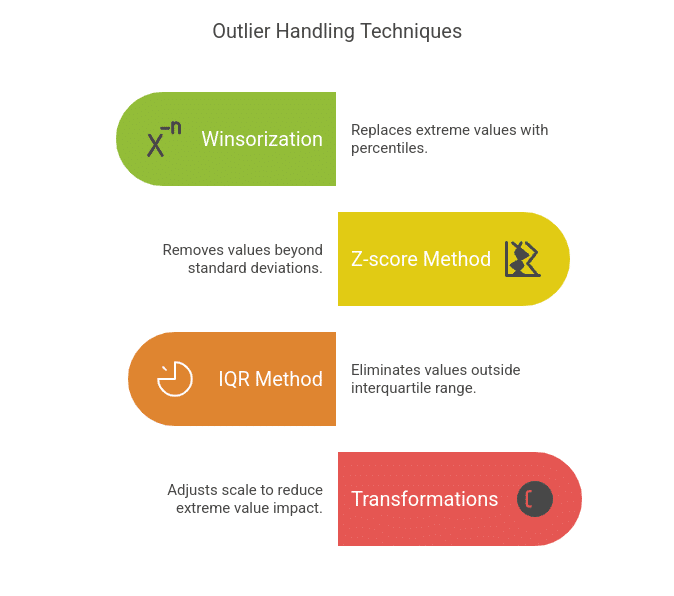

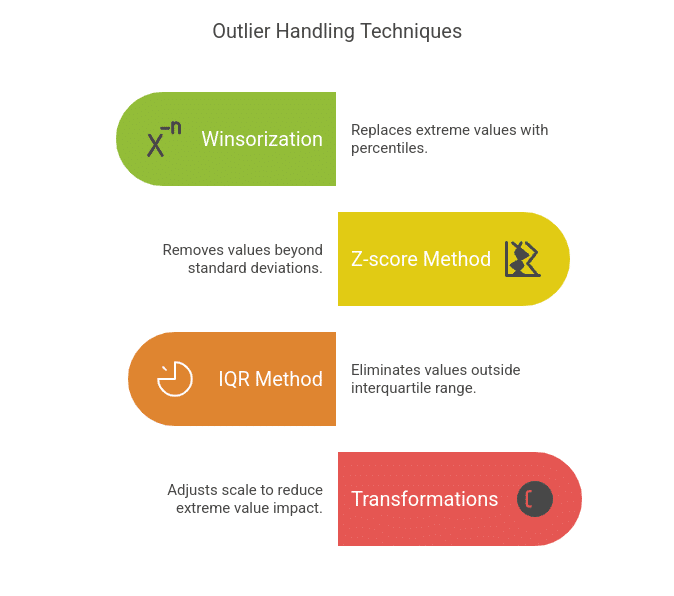

7. Handling Outliers

Outliers are extreme values that can distort a model’s predictions. Identifying and handling them properly prevents skewed results.

Techniques:

- Winsorization: Replaces extreme values with a specified percentile (e.g., capping values at the 5th and 95th percentiles).

- Z-score Method: Removes values that are more than a certain number of standard deviations from the mean (e.g., ±3σ).

- IQR (Interquartile Range) Method: Removes values that fall more than 1.5 times the interquartile range below Q1 or above Q3.

- Transformations (Log, Square Root): Reduce the influence of extreme values by adjusting scale.

Example:

Detecting outliers in a salary dataset using IQR:

| Employee | Salary | Outlier (IQR Method) |
| --- | --- | --- |
| A | 50,000 | No |
| B | 52,000 | No |
| C | 200,000 | Yes |
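The IQR rule and winsorization can be sketched in pandas as below. The salary sample is hypothetical and slightly larger than the table above, since quartiles are not very meaningful on three rows.

```python
import pandas as pd

# Hypothetical salaries with one extreme value.
salaries = pd.Series(
    [48_000, 50_000, 51_000, 52_000, 53_000, 55_000, 200_000], dtype=float
)

# IQR rule: flag values below Q1 - 1.5*IQR or above Q3 + 1.5*IQR.
q1, q3 = salaries.quantile(0.25), salaries.quantile(0.75)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
is_outlier = (salaries < lower) | (salaries > upper)

# Winsorization: cap values at the 5th and 95th percentiles instead of dropping them.
capped = salaries.clip(
    lower=salaries.quantile(0.05), upper=salaries.quantile(0.95)
)

print(salaries[is_outlier])
print(capped)
```

Capping keeps the row in the dataset, which is often preferable when the extreme value is genuine rather than a data-entry error.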

8. Feature Interaction

Feature interaction helps capture relationships between variables that are not obvious in their raw form.

Techniques:

- Multiplication or Division of Features:
  - Example: Instead of using “Weight” and “Height” separately, create BMI = Weight / Height².

- Polynomial Features:
  - Example: Adding squared or cubic terms for better non-linear modelling.

- Clustering-Based Features:
  - Assign cluster labels using k-means, which can be used as categorical inputs.

Example:

Creating an “Engagement Score” for a user based on:

Engagement Score = (Logins * Time Spent) / (1 + Bounce Rate).

By leveraging these feature engineering techniques, you can transform raw data into powerful predictive inputs, ultimately improving model accuracy, efficiency, and interpretability.

Comparison: Good vs. Bad Feature Engineering

| Aspect | Good Feature Engineering | Bad Feature Engineering |
| --- | --- | --- |
| Data Preprocessing | Handles missing values, removes outliers, and applies proper scaling. | Ignores missing values, includes outliers, and fails to standardize. |
| Feature Selection | Uses correlation analysis, importance scores, and domain expertise to pick features. | Uses all features, even when some are redundant or irrelevant. |
| Feature Transformation | Normalizes, scales, and applies log transformations when necessary. | Uses raw data without processing, leading to inconsistent model behavior. |
| Encoding Categorical Data | Uses proper encoding techniques like one-hot, target, or frequency encoding. | Assigns arbitrary numeric values to categories, misleading the model. |
| Feature Creation | Introduces meaningful new features (e.g., ratios, interactions, polynomial terms). | Adds random variables or duplicates existing features. |
| Handling Time-based Data | Extracts useful patterns (e.g., day of the week, trend indicators). | Leaves timestamps in raw format, making patterns harder to learn. |
| Model Performance | Higher accuracy, generalizes well on new data, interpretable results. | Poor accuracy, overfits training data, fails in real-world scenarios. |

How Do Good and Bad Feature Engineering Affect Model Performance?

Example 1: Predicting House Prices

- Good Feature Engineering: Instead of using the raw “Year Built” column, create a new feature: “House Age” (Current Year - Year Built). This provides a clearer relationship with price.

- Bad Feature Engineering: Keeping the “Year Built” column as-is, forcing the model to learn complex patterns instead of focusing on a straightforward numerical relationship.

Example 2: Credit Card Fraud Detection

- Good Feature Engineering: Creating a new feature “Number of Transactions in the Last Hour” helps identify suspicious activity.

- Bad Feature Engineering: Using raw timestamps without any transformation, making it difficult for the model to detect time-based anomalies.

Example 3: Customer Churn Prediction

- Good Feature Engineering: Combining customer interaction data into a “Monthly Activity Score” (e.g., logins, purchases, support queries).

- Bad Feature Engineering: Using each interaction type separately, making the dataset unnecessarily complex and harder for the model to interpret.
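Two of the “good” features from these examples can be sketched in pandas. The transactions belong to a single hypothetical card, the reference year is an assumption, and the rolling window counts the current transaction together with any that occurred in the preceding 60 minutes.

```python
import pandas as pd

# Hypothetical transactions for one card, indexed by time.
tx = pd.DataFrame(
    {"amount": [25.0, 40.0, 310.0, 12.0]},
    index=pd.to_datetime([
        "2024-03-01 10:00",
        "2024-03-01 10:20",
        "2024-03-01 10:50",
        "2024-03-01 12:30",
    ]),
)

# Number of transactions in the trailing 60-minute window (current one included).
tx["tx_last_hour"] = tx["amount"].rolling("60min").count().astype(int)

# House age derived from the raw build year.
houses = pd.DataFrame({"year_built": [1990, 2015]})
reference_year = 2024  # assumption: the year in which predictions are made
houses["house_age"] = reference_year - houses["year_built"]

print(tx)
print(houses)
```

With several cards in one table, the same rolling count would typically be computed per card after grouping by a card identifier.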

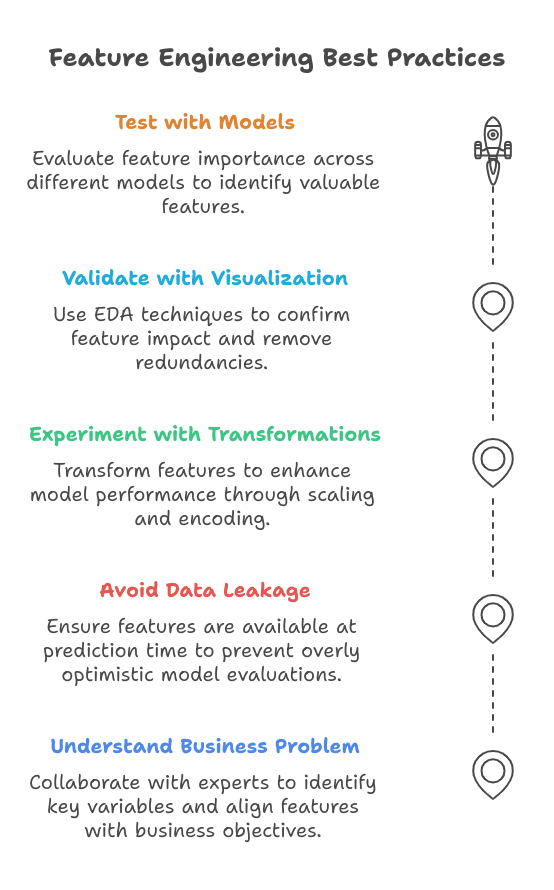

Best Practices in Feature Engineering

1. Understanding the Business Problem Before Selecting Features

Before applying feature engineering techniques, it’s crucial to understand the specific problem the model aims to solve. Selecting features without considering the domain context can lead to irrelevant or misleading inputs, reducing model effectiveness.

Best Practices:

- Collaborate with domain experts to identify key variables.

- Analyze historical trends and real-world constraints.

- Ensure selected features align with business objectives.

Example:

For a loan default prediction model, features like credit score, income stability, and past loan repayment behavior are more valuable than generic features like ZIP code.

2. Avoiding Data Leakage Through Careful Feature Selection

Data leakage occurs when information that would not be available at prediction time, such as the target itself, future values, or statistics computed on the test set, is inadvertently used during training. This leads to overly optimistic performance that doesn’t generalize well to real-world scenarios.

Best Practices:

- Exclude features that wouldn’t be available at the time of prediction.

- Avoid using future information in training data.

- Be cautious with derived features based on target variables.

Example of Data Leakage:

Using “total purchases in the next 3 months” as a feature to predict customer churn. Since this information wouldn’t be available at prediction time, it would lead to a misleadingly optimistic model evaluation.

Fix: Use past purchase behavior (e.g., “number of purchases in the last 6 months”) instead.
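A closely related form of leakage comes from fitting preprocessing steps on the full dataset before splitting it. One common safeguard, sketched below on synthetic data, is to split first and wrap preprocessing and model in a scikit-learn pipeline so that the scaler only ever sees the training rows. The data and the choice of model are assumptions for the example.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data standing in for historical (past-only) customer features.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = (X[:, 0] + 0.5 * X[:, 1] > 0).astype(int)

# Split BEFORE any fitting so test rows never influence preprocessing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# The pipeline fits the scaler on the training split only.
model = make_pipeline(StandardScaler(), LogisticRegression())
model.fit(X_train, y_train)

accuracy = model.score(X_test, y_test)
print(round(accuracy, 3))
```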

3. Experimenting with Different Transformations and Encodings

Transforming features into more suitable formats can significantly improve model performance. This includes scaling numerical variables, encoding categorical variables, and applying mathematical transformations.

Best Practices:

- Scale numerical variables (Min-Max Scaling, Standardization) to ensure consistency.

- Encode categorical variables (One-Hot, Label Encoding, Target Encoding) based on data distribution.

- Apply transformations (Log, Square Root, Power Transform) to reduce skew in the data.

Example:

For a dataset with highly skewed income data:

- Raw Income Data: [10,000, 20,000, 50,000, 1,000,000] (skewed distribution)

- Log Transformation (base 10): [4, 4.3, 4.7, 6] (reduces skewness, improving model performance).
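The numbers in this example correspond to a base-10 logarithm, which can be checked with NumPy. The skewness comparison uses pandas' sample skewness and is included only to show the direction of the change on this small sample.

```python
import numpy as np
import pandas as pd

income = np.array([10_000, 20_000, 50_000, 1_000_000], dtype=float)

# Base-10 log compresses the large value far more than the small ones.
log_income = np.log10(income)
print(np.round(log_income, 1))

# Sample skewness before and after the transformation.
skew_raw = pd.Series(income).skew()
skew_log = pd.Series(log_income).skew()
print(skew_raw > skew_log)
```

For data that contains zeros, `np.log1p` (the log of 1 + x) is a common alternative.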

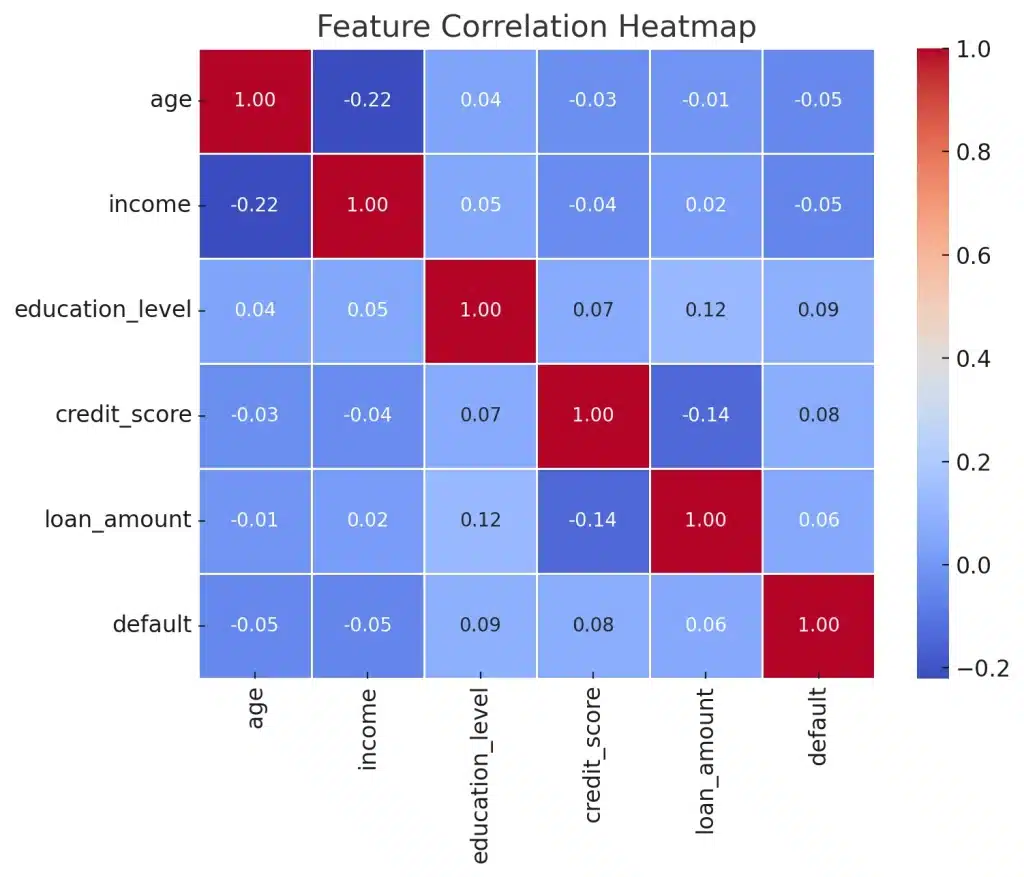

4. Validating Engineered Features Using Visualization and Correlation Analysis

Before finalizing features, it’s important to validate their impact using exploratory data analysis (EDA) techniques.

Best Practices:

- Use histograms and box plots to check feature distributions.

- Use scatter plots and correlation heatmaps to identify relationships between variables.

- Remove highly correlated features to prevent multicollinearity.

Example:

In a sales prediction model, if “marketing spend” and “ad budget” have a correlation > 0.9, keeping both might introduce redundancy. Instead, use one or create a derived feature like “marketing efficiency = revenue/ad spend” (using past revenue, so the target does not leak into the feature).

5. Testing Features with Different Models to Evaluate Impact

Feature importance varies across different algorithms. A feature that improves performance in one model might not be useful in another.

Best Practices:

- Train multiple models (Linear Regression, Decision Trees, Neural Networks) and compare feature importance.

- Use Permutation Importance or SHAP values to understand each feature’s contribution.

- Perform Ablation Studies (removing one feature at a time) to measure performance impact.

Example:

In a customer churn model:

- Decision trees might prioritize customer complaints and contract types.

- Logistic regression might find tenure and monthly bill amounts more important.

By testing different models, you can identify the most valuable features.
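One way to compare feature importance across models is scikit-learn's `permutation_importance`, sketched here on synthetic data. The feature names are hypothetical labels, and the target is constructed so that only the first feature carries signal, which is why both models should rank it highest.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic data: the label depends only on the first column.
rng = np.random.default_rng(42)
X = rng.normal(size=(400, 3))
y = (X[:, 0] > 0).astype(int)
feature_names = ["tenure", "monthly_bill", "noise"]  # hypothetical names

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

models = {
    "logistic_regression": LogisticRegression(),
    "random_forest": RandomForestClassifier(n_estimators=50, random_state=0),
}

top_feature = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    # Shuffle each feature on held-out data and measure the drop in accuracy.
    result = permutation_importance(
        model, X_test, y_test, n_repeats=5, random_state=0
    )
    top_feature[name] = feature_names[int(np.argmax(result.importances_mean))]

print(top_feature)
```

On real data the rankings will often differ between models, which is exactly the signal this best practice is meant to surface.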

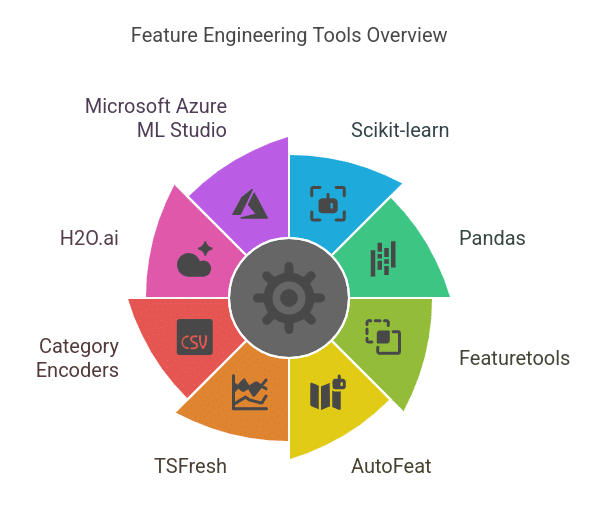

Tools for Feature Engineering

1. Python Libraries for Feature Engineering

Python is the go-to language for feature engineering because of its robust libraries:

- Pandas – For data manipulation, feature extraction, and handling missing values.

- NumPy – For mathematical operations and transformations on numerical data.

- Scikit-learn – For preprocessing techniques like scaling, encoding, polynomial feature generation, and feature selection.

- Feature-engine – A specialized library with transformers for handling outliers, imputation, and categorical encoding.

- SciPy – Useful for statistical transformations, such as Box-Cox and Yeo-Johnson power transformations.

2. Automated Feature Engineering Tools

- Featuretools – Automates the creation of new features using deep feature synthesis (DFS).

- tsfresh – Extracts meaningful time-series features like trend, seasonality, and entropy.

- AutoFeat – Automates feature extraction and selection using AI-driven techniques.

- H2O.ai AutoML – Performs automatic feature transformation and selection.

3. Big Data Tools for Feature Engineering

- Spark MLlib (Apache Spark) – Handles large-scale data transformations and feature extraction in distributed environments.

- Dask – Parallel processing for scaling feature engineering on large datasets.

- Feast (open-source feature store) – Manages and serves features efficiently for machine learning models in production.

4. Feature Selection and Importance Tools

- SHAP (SHapley Additive exPlanations) – Measures feature importance and impact on predictions.

- LIME (Local Interpretable Model-agnostic Explanations) – Helps interpret individual predictions and assess feature relevance.

- Boruta – A wrapper method for selecting the most important features using a random forest algorithm.

5. Visualization Tools for Feature Engineering

- Matplotlib & Seaborn – For exploring feature distributions, correlations, and trends.

- Plotly – For interactive feature analysis and pattern detection.

- Yellowbrick – Provides visual diagnostics for feature selection and model performance.

Conclusion

Mastering feature engineering is essential for building high-performance machine learning models, and the right tools can significantly streamline the process.

Leveraging these tools, from data preprocessing with Pandas and Scikit-learn to automated feature extraction with Featuretools and SHAP for interpretability, can improve model accuracy and efficiency.
