In graph analysis, the need for labeled data is a significant hurdle for traditional supervised learning methods, particularly in academic, social, and biological networks. To overcome this limitation, Graph Self-supervised Pre-training (GSP) techniques have emerged, leveraging the intrinsic structures and properties of graph data to extract meaningful representations without labeled examples. GSP methods fall into two broad categories: contrastive and generative.

Contrastive methods, like GraphCL and SimGRACE, create multiple graph views through augmentation and learn representations by contrasting positive and negative samples. Generative methods, like GraphMAE and MaskGAE, focus on learning node representations via a reconstruction objective. Notably, generative GSP approaches are often simpler and more effective than their contrastive counterparts, which rely on meticulously designed augmentation and sampling strategies.

Current generative Graph Masked AutoEncoder (GMAE) models primarily concentrate on reconstructing node features, and so capture predominantly node-level information. This single-scale approach, however, fails to address the multi-scale nature inherent in many graphs, such as social networks, recommendation systems, and molecular structures. These graphs contain both node-level details and subgraph-level information, exemplified by functional groups in molecular graphs. The inability of current GMAE models to effectively learn this complex, higher-level structural information results in diminished performance.

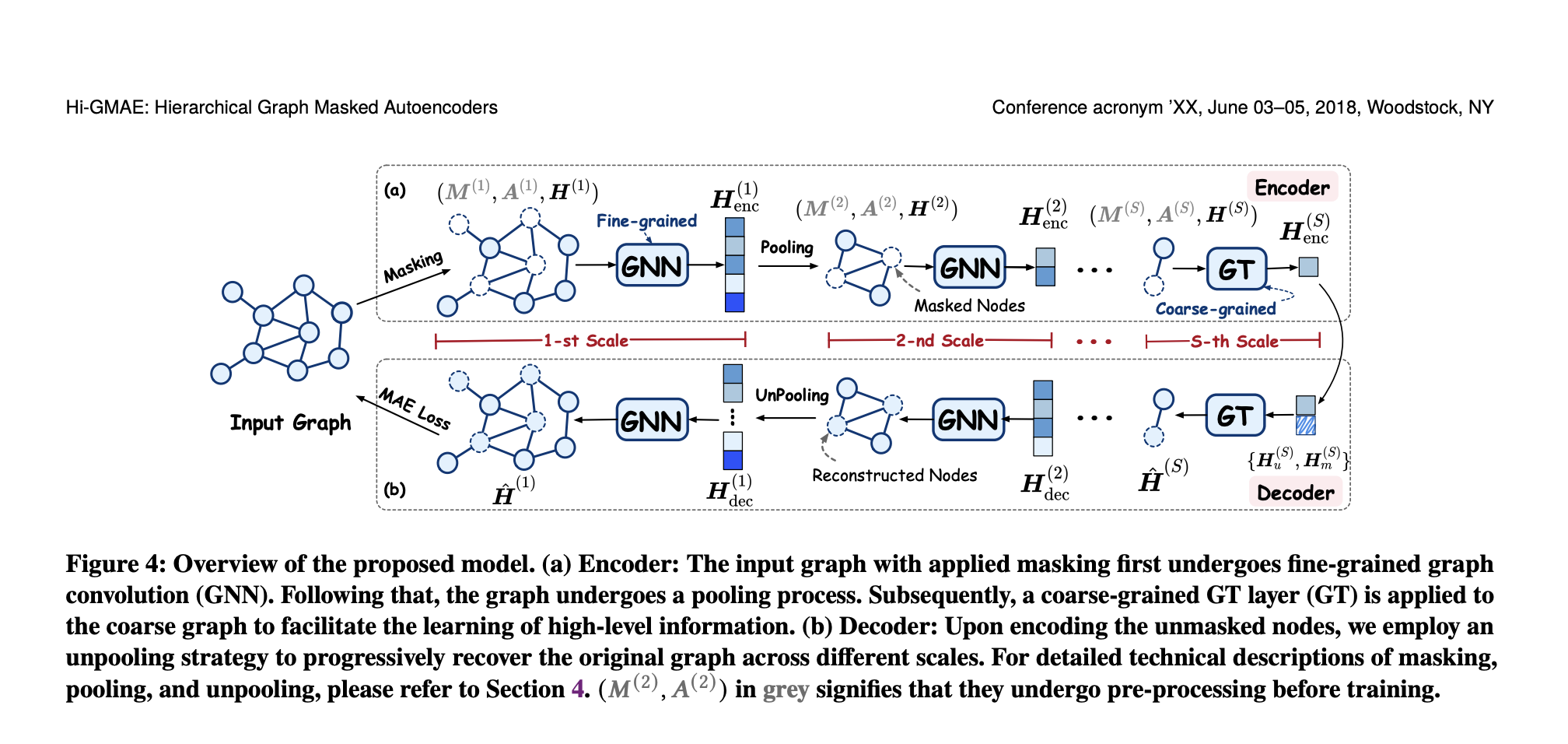

To address these limitations, a team of researchers from various institutions, including Wuhan University, introduced the Hierarchical Graph Masked AutoEncoders (Hi-GMAE) framework. Hi-GMAE comprises three main components designed to capture hierarchical information in graphs. The first component, multi-scale coarsening, constructs coarse graphs at multiple scales using graph pooling methods that progressively cluster nodes into super-nodes.
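To make the coarsening step concrete, here is a minimal NumPy sketch, not the authors' implementation. It assumes the cluster assignment at each scale is already given (the article does not say which pooling method produces it), and the names `coarsen` and `multi_scale_coarsen` are hypothetical. Each step merges the nodes of a cluster into one super-node, sums the edges between clusters, and averages member features.

```python
import numpy as np

def coarsen(adj, feats, assign):
    """One coarsening step: merge nodes into super-nodes.

    adj:    (n, n) adjacency matrix of the finer graph
    feats:  (n, d) node feature matrix
    assign: (n,) integer array; assign[i] is the super-node id of node i
            (assumed given here; ids must be 0..k-1 with no empty cluster)
    """
    k = int(assign.max()) + 1
    S = np.eye(k)[assign]                    # one-hot assignment matrix, shape (n, k)
    coarse_adj = S.T @ adj @ S               # entry (a, b) sums fine edges between clusters a and b
    np.fill_diagonal(coarse_adj, 0)          # drop edges that fall inside a single cluster
    sizes = S.sum(axis=0)[:, None]           # number of members per super-node, shape (k, 1)
    coarse_feats = (S.T @ feats) / sizes     # mean of member features
    return coarse_adj, coarse_feats

def multi_scale_coarsen(adj, feats, assigns):
    """Apply coarsen() repeatedly; returns graphs ordered from finest to coarsest."""
    graphs = [(adj, feats)]
    for assign in assigns:
        adj, feats = coarsen(adj, feats, assign)
        graphs.append((adj, feats))
    return graphs

# Usage: a 6-node path graph coarsened to 3 super-nodes, then to 2.
adj = np.zeros((6, 6))
for i in range(5):
    adj[i, i + 1] = adj[i + 1, i] = 1
feats = np.arange(6, dtype=float).reshape(6, 1)
graphs = multi_scale_coarsen(adj, feats, [np.array([0, 0, 1, 1, 2, 2]), np.array([0, 0, 1])])
```

Mean-pooling the features and summing the edge weights are illustrative choices; a learned or spectral pooling method would supply both the assignments and the aggregation rule.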

The second component, Coarse-to-Fine (CoFi) masking with recovery, introduces a novel masking strategy that keeps masked subgraphs consistent across all scales. The strategy starts by randomly masking the coarsest graph, then back-projects the mask to finer scales using an unpooling operation. A gradual recovery process selectively unmasks certain nodes to aid learning from subgraphs that were initially fully masked.
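The coarse-to-fine masking idea can be sketched as follows. This is an illustration under stated assumptions rather than the paper's exact procedure: the function name `cofi_mask` and the `recover_ratio` parameter are hypothetical, recovery is applied as uniform random unmasking at every finer scale, and the cluster assignments are taken as given.

```python
import numpy as np

def cofi_mask(assigns, mask_ratio, recover_ratio, rng):
    """Coarse-to-fine masking sketch.

    assigns:       list of integer arrays ordered finest to coarsest;
                   assigns[l][i] is the super-node of node i at scale l
    mask_ratio:    fraction of coarsest-graph nodes to mask
    recover_ratio: fraction of masked nodes to unmask at each finer scale
                   (hypothetical parameter; 0 disables recovery)
    Returns a list of boolean masks ordered finest to coarsest (True = masked).
    """
    n_coarsest = int(assigns[-1].max()) + 1
    n_masked = int(round(mask_ratio * n_coarsest))
    mask = np.zeros(n_coarsest, dtype=bool)
    mask[rng.choice(n_coarsest, size=n_masked, replace=False)] = True

    masks = [mask]
    for assign in reversed(assigns):
        # Unpooling: every node inherits the mask state of its super-node.
        mask = mask[assign]
        # Recovery: unmask a random subset so subgraphs are not fully hidden.
        masked_idx = np.flatnonzero(mask)
        n_recover = int(recover_ratio * masked_idx.size)
        if n_recover > 0:
            mask[rng.choice(masked_idx, size=n_recover, replace=False)] = False
        masks.append(mask)
    return masks[::-1]

# Usage: 8 nodes -> 4 super-nodes -> 2 super-nodes.
assigns = [np.array([0, 0, 1, 1, 2, 2, 3, 3]), np.array([0, 0, 1, 1])]
masks = cofi_mask(assigns, mask_ratio=0.5, recover_ratio=0.0, rng=np.random.default_rng(0))
```

With `recover_ratio=0.0`, a node is masked at a finer scale exactly when its super-node is masked at the coarser scale, which is the cross-scale consistency the strategy aims for. A positive ratio only ever removes nodes from the mask, so a masked fine node always has a masked ancestor at the coarsest scale.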

The third key component of Hi-GMAE is the Fine- and Coarse-Grained (Fi-Co) encoder and decoder. The hierarchical encoder combines fine-grained graph convolution modules, which capture local information at lower graph scales, with coarse-grained graph transformer (GT) modules, which focus on global information at higher graph scales. The corresponding lightweight decoder gradually reconstructs the learned representations and projects them back to the original graph scale, so that structural information from every level is captured and represented.
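The split between local and global modules can be illustrated with a toy forward pass. This is a rough sketch, not the Fi-Co architecture itself: it assumes a single mean-aggregation graph convolution at the fine scale, mean pooling into super-nodes, and one single-head self-attention layer at the coarse scale. All function names and the `params` dictionary are hypothetical, and the decoder is omitted.

```python
import numpy as np

def graph_conv(adj, x, w):
    """Fine-grained module: average each node with its neighbors, then linear + ReLU."""
    a_hat = adj + np.eye(adj.shape[0])                  # add self-loops
    a_norm = a_hat / a_hat.sum(axis=1, keepdims=True)   # row-normalize (mean aggregation)
    return np.maximum(a_norm @ x @ w, 0.0)

def self_attention(x, wq, wk, wv):
    """Coarse-grained module: single-head self-attention over all super-nodes."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(q.shape[1])
    scores -= scores.max(axis=1, keepdims=True)         # numerical stability for softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)
    return attn @ v                                     # every super-node attends to every other

def fico_encode(adj, x, assign, params):
    """Local message passing at the fine scale, global attention at the coarse scale."""
    h_fine = graph_conv(adj, x, params["w_conv"])
    k = int(assign.max()) + 1
    S = np.eye(k)[assign]                               # one-hot cluster assignment
    h_pooled = (S.T @ h_fine) / S.sum(axis=0)[:, None]  # mean-pool into super-nodes
    h_coarse = self_attention(h_pooled, params["wq"], params["wk"], params["wv"])
    return h_fine, h_coarse

# Usage: 6-node path graph, 3 input features, 4 hidden units, 3 super-nodes.
rng = np.random.default_rng(0)
adj = np.zeros((6, 6))
for i in range(5):
    adj[i, i + 1] = adj[i + 1, i] = 1
params = {"w_conv": rng.normal(size=(3, 4)),
          "wq": rng.normal(size=(4, 4)),
          "wk": rng.normal(size=(4, 4)),
          "wv": rng.normal(size=(4, 4))}
h_fine, h_coarse = fico_encode(adj, rng.normal(size=(6, 3)), np.array([0, 0, 1, 1, 2, 2]), params)
```

The design point this shows is the difference in receptive field: the convolution only mixes a node with its direct neighbors, while attention at the coarse scale lets every super-node exchange information with every other in one step.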

To validate the effectiveness of Hi-GMAE, extensive experiments were conducted on various widely used datasets, covering both unsupervised and transfer learning tasks. The experimental results showed that Hi-GMAE outperforms existing state-of-the-art contrastive and generative pre-training models. These findings underscore the advantages of the multi-scale GMAE approach over traditional single-scale models, highlighting its superior ability to capture and leverage hierarchical graph information.

In conclusion, Hi-GMAE represents a significant advancement in self-supervised graph pre-training. By integrating multi-scale coarsening, an innovative masking strategy, and a hierarchical encoder-decoder architecture, Hi-GMAE effectively captures the complexities of graph structures at various levels. The framework’s superior performance in experimental evaluations solidifies its potential as a powerful tool for graph learning tasks, setting a new benchmark in graph analysis.

Check out the Paper. All credit for this research goes to the researchers of this project.

Arshad is an intern at MarktechPost. He is currently pursuing his Int. MSc in Physics at the Indian Institute of Technology Kharagpur. He believes that understanding things at a fundamental level leads to new discoveries, which in turn lead to advances in technology. He is passionate about understanding nature fundamentally with the help of tools like mathematical models, ML models, and AI.