Machine Studying (ML) is a subset of synthetic intelligence (AI) that facilities on creating algorithms to study from knowledge and make predictions or choices with no need detailed programming for every job. Fairly than adhering to strict pointers, ML fashions acknowledge patterns and improve their effectiveness as time progresses.

Greedy these phrases and their associated algorithms is essential for using machine studying successfully in various fields, spanning healthcare and finance to automation and synthetic intelligence functions.

On this article, we are going to discover totally different sorts of Machine Studying algorithms, how they perform, and their functions in the true world to deepen your comprehension of their significance and sensible implementation.

What’s a Machine Studying Algorithm?

A machine studying algorithm consists of guidelines or mathematical fashions that permit computer systems to acknowledge patterns in knowledge and generate predictions or choices with out direct programming. These algorithms analyze enter knowledge, acknowledge connections, and improve effectivity as time progresses.

How They Work:

- Practice on a dataset to acknowledge patterns.

- Take a look at on new knowledge to guage efficiency.

- Optimize by adjusting parameters to enhance accuracy.

Machine studying algorithms drive functions comparable to advice programs, fraud detection, and autonomous automobiles.

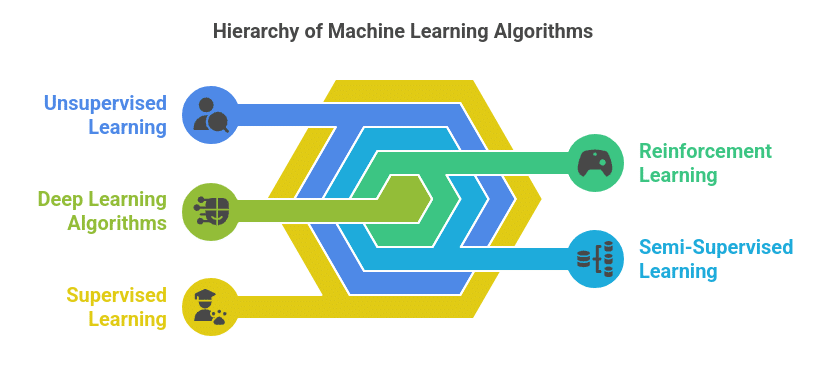

Varieties of Machine Studying Algorithms

Machine Studying algorithms might be categorized into 5 varieties:

- Supervised Studying

- Unsupervised Studying

- Reinforcement Studying

- Semi-Supervised Studying

- Deep Studying Algorithms

1. Supervised Studying

In supervised studying, the mannequin is educated utilizing a labeled dataset, indicating that each coaching instance accommodates an input-output pair. This algorithm learns to affiliate inputs with the suitable outputs primarily based on historic knowledge.

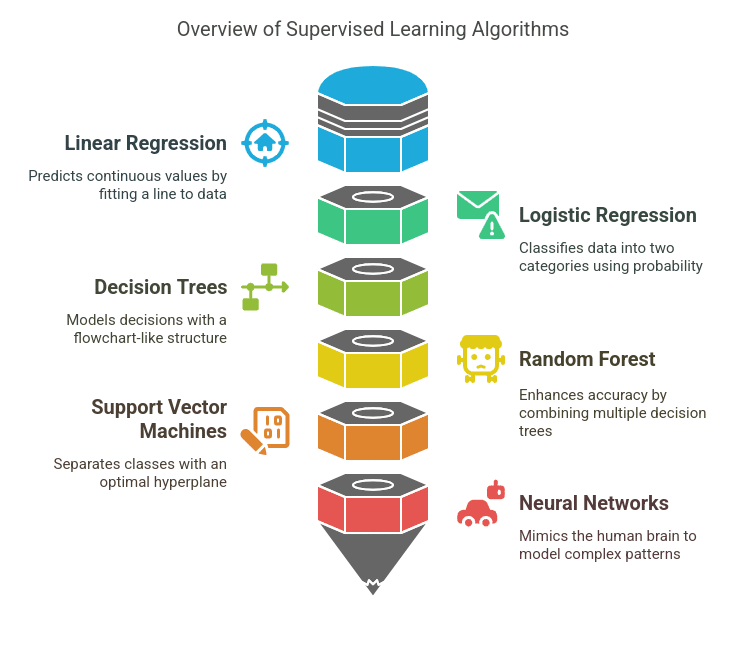

Frequent Supervised Studying Algorithms:

A. Linear Regression

Linear Regression is a basic algorithm used to foretell steady numerical values primarily based on enter options. It really works by becoming a straight line (y=mx+b) to the information that greatest represents the connection between the unbiased and dependent variables.

- Instance: Estimating dwelling values contemplating parts comparable to space, bed room depend, and geographic place.

- Key Idea: It reduces the discrepancy between noticed and estimated values by using the least squares approach

B. Logistic Regression

Although its title suggests in any other case, Logistic Regression is employed for classification as a substitute of regression. It makes use of the sigmoid perform to remodel predicted values into a variety of 0 to 1, which makes it appropriate for binary classification points.

- Instance: Figuring out whether or not an electronic mail is spam or not primarily based on the presence of particular key phrases.

- Key Idea: Makes use of chance to categorise knowledge factors into two classes and applies a threshold (e.g., 0.5) to make choices.

C. Resolution Timber

A Resolution Tree is a mannequin resembling a flowchart, with every node symbolizing a characteristic, each department indicating a choice, and every leaf denoting an consequence. It’s able to managing each classification and regression duties.

- Instance: A financial institution decides whether or not to approve a mortgage primarily based on revenue, credit score rating, and employment historical past.

- Key Idea: Splits knowledge primarily based on characteristic situations to maximise data achieve utilizing metrics like Gini Impurity or Entropy.

D. Random Forest

Random Forest is an ensemble studying approach that creates a number of determination bushes and merges their outcomes to boost accuracy and reduce overfitting.

- Instance: Predicting whether or not a buyer will churn primarily based on transaction historical past, demographics, and interactions with customer support.

- Key Idea: Makes use of bootstrap aggregating (bagging) to generate various bushes and averages their predictions for stability.

E. Assist Vector Machines (SVM)

SVM is a strong classification algorithm that finds the optimum hyperplane to separate totally different lessons. It’s significantly helpful for datasets with clear margins between classes.

- Instance: Classifying handwritten digits within the MNIST dataset.

- Key Idea: Makes use of kernel capabilities (linear, polynomial, RBF) to map knowledge into larger dimensions for higher separation.

F. Neural Networks

Neural Networks mimic the human mind, consisting of a number of layers of interconnected neurons that study from knowledge. They’re broadly used for deep studying functions.

- Instance: Picture recognition in self-driving automobiles to detect pedestrians, visitors indicators, and different automobiles.

Purposes of Supervised Studying:

- Electronic mail Spam Filtering

- Medical Analysis

- Buyer Churn Prediction

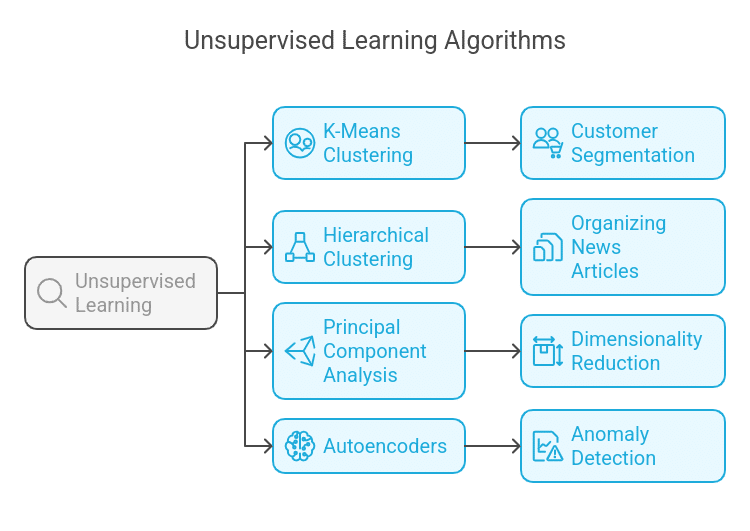

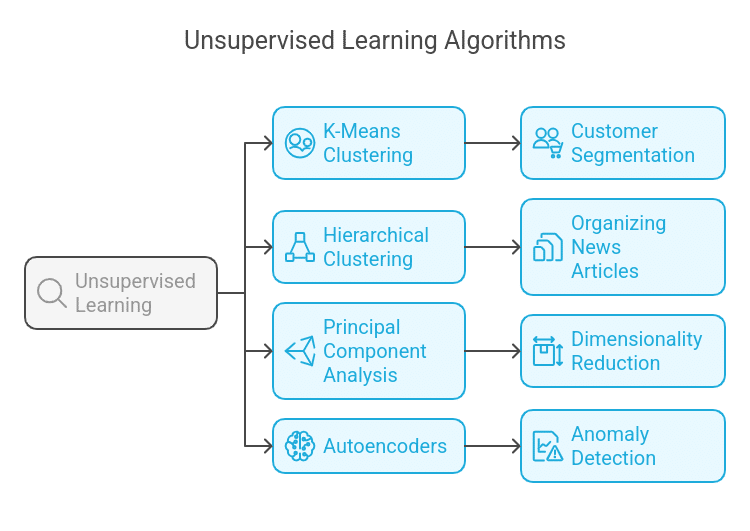

2. Unsupervised Studying

Unsupervised studying offers with knowledge that doesn’t have labeled responses. The algorithm finds hidden patterns and buildings within the dataset.

Frequent Unsupervised Studying Algorithms:

A. Ok-Means Clustering

Ok-Means is a well-liked clustering algorithm that teams comparable knowledge factors into Ok clusters. It assigns every level to the closest cluster centroid and updates centroids iteratively to reduce variance inside clusters.

- Instance: Buyer segmentation in e-commerce, the place customers are grouped primarily based on their buying conduct.

- Key Idea: Makes use of the Euclidean distance to assign knowledge factors to clusters and updates centroids till convergence.

B. Hierarchical Clustering

Hierarchical Clustering builds a hierarchy of clusters utilizing both Agglomerative (bottom-up) or Divisive (top-down) approaches. It creates a dendrogram to visualise relationships between clusters.

- Instance: Organizing information articles into topic-based teams with out predefined classes.

- Key Idea: Makes use of distance metrics (e.g., single-linkage, complete-linkage) to merge or break up clusters.

C. Principal Part Evaluation (PCA)

PCA is a technique for lowering dimensionality that converts high-dimensional knowledge right into a lower-dimensional house whereas sustaining key data. It finds the principal parts, that are the instructions of most variance.

- Instance: Decreasing the variety of options in a picture dataset whereas retaining essential patterns for machine studying fashions.

- Key Idea: Makes use of eigenvectors and eigenvalues to challenge knowledge onto fewer dimensions whereas minimizing data loss.

D. Autoencoders

Autoencoders are a kind of neural community used for characteristic studying, compression, and anomaly detection. They encompass an encoder (compressing enter knowledge) and a decoder (reconstructing the unique knowledge).

- Instance: Detecting fraudulent transactions by figuring out uncommon patterns in monetary knowledge.

- Key Idea: Makes use of a bottleneck layer to seize necessary options and reconstructs knowledge utilizing imply squared error (MSE) loss.

Purposes of Unsupervised Studying:

- Buyer Segmentation

- Anomaly Detection in Fraud Detection

- Recommender Techniques (e.g., Netflix, Amazon)

Perceive the important thing variations between Supervised and Unsupervised Studying and the way they impression machine studying fashions.

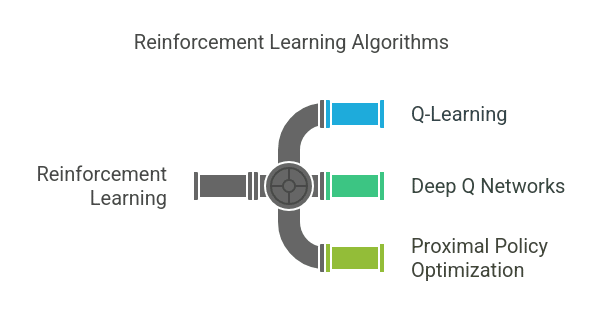

3. Reinforcement Studying

Reinforcement studying (RL) entails an agent studying to work together with an surroundings to maximise the entire sum of rewards over time.

Key Ideas in Reinforcement Studying:

- Agent – The entity that takes actions.

- Atmosphere – The world through which the agent operates.

- Actions – Selections the agent could make.

- Rewards – Suggestions indicators guiding the agent.

Frequent Reinforcement Studying Algorithms:

A. Q-Studying

Q-learning is a reinforcement studying algorithm with out a mannequin that develops a perfect action-selection coverage via a Q-table. It follows the Bellman equation to replace the Q-values primarily based on rewards obtained from the surroundings.

- Instance: Coaching an AI agent to play a easy recreation like Tic-Tac-Toe by studying which strikes result in victory over time.

- Key Idea: Makes use of the ε-greedy coverage to stability exploration (making an attempt new actions) and exploitation (selecting the best-known motion).

B. Deep Q Networks (DQN)

DQN is an extension of Q-Studying that leverages deep neural networks to approximate the Q-values, making it appropriate for high-dimensional environments the place sustaining a Q-table is impractical.

- Instance: Educating an AI to play Atari video games, like Breakout, the place uncooked pixel knowledge is used as enter.

- Key Idea: Makes use of expertise replay (storing previous experiences) and a goal community (stabilizing coaching) to enhance studying.

C. Proximal Coverage Optimization (PPO)

PPO is a policy-based reinforcement studying algorithm that optimizes actions utilizing a belief area strategy, making certain secure updates and stopping giant, destabilizing coverage adjustments.

- Instance: Coaching robotic arms to know objects effectively or enabling recreation AI to strategize in advanced environments.

- Key Idea: Makes use of clipped goal capabilities to forestall overly aggressive updates and enhance coaching stability.

Purposes of Reinforcement Studying:

- Recreation Enjoying (e.g., AlphaGo, OpenAI Health club)

- Robotics Automation

- Autonomous Automobiles

Perceive the basics of Reinforcement Studying and get it to make choices in AI and robotics.

4. Semi-Supervised Studying

Semi-supervised studying falls between supervised and unsupervised studying, the place solely a small portion of the dataset is labeled, and the remaining is unlabeled.

Purposes:

- Speech Recognition

- Textual content Classification

- Medical Picture Evaluation

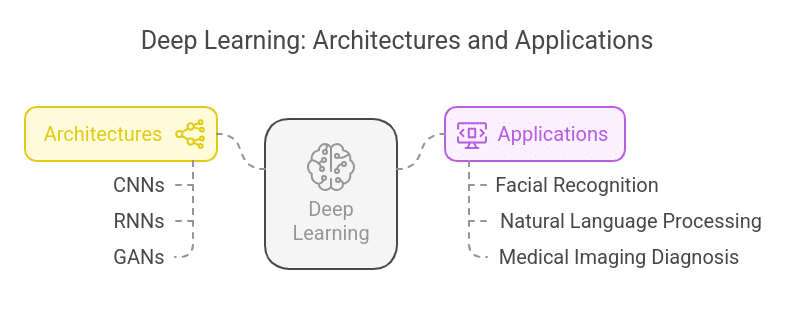

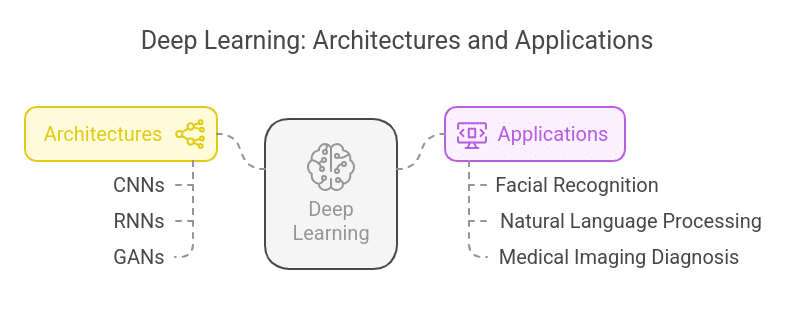

5. Deep Studying Algorithms

Deep Studying is a website of Machine Studying that comes with neural networks with a number of layers (i.e., deep networks) to find refined attributes of uncooked knowledge.

Widespread Deep Studying Architectures:

Purposes:

Grasp Machine Studying with Python on this free course. Study key ML ideas, algorithms, and hands-on implementation from business specialists.

Selecting the Proper Machine Studying Algorithm

Deciding on the suitable machine studying algorithm will depend on varied components, together with the character of the information, the issue kind, and computational effectivity.

Listed here are key concerns for selecting the right algorithm:

- Sort of Knowledge: Structured and Unstructured

- Downside Sort: Classification, Regression, Clustering, or Anomaly Detection

- Accuracy vs. Interpretability: Resolution bushes are simple to interpret, whereas deep studying fashions are extra correct however extra advanced to grasp.

- Computational Energy: Some fashions require excessive computational assets (e.g., deep studying).

Experimentation and mannequin analysis utilizing methods like cross-validation and hyperparameter tuning are essential in deciding on the best-performing algorithm.

Uncover the most recent Synthetic Intelligence and Machine Studying Tendencies shaping the way forward for AI.

Pattern Codes of ML Algorithms in Python

Linear Regression in Python

from sklearn.linear_model import LinearRegression

import numpy as np

# Pattern Knowledge

X = np.array([1, 2, 3, 4, 5]).reshape(-1, 1)

y = np.array([2, 4, 6, 8, 10])

# Mannequin Coaching

mannequin = LinearRegression()

mannequin.match(X, y)

# Prediction

print(mannequin.predict([[6]])) # Output: Approx 12

Logistic Regression in Python

from sklearn.linear_model import LogisticRegression

import numpy as np

# Pattern Knowledge

X = np.array([[1], [2], [3], [4], [5]])

y = np.array([0, 0, 1, 1, 1]) # Binary classification

# Mannequin Coaching

mannequin = LogisticRegression()

mannequin.match(X, y)

# Prediction

print(mannequin.predict([[2.5]])) # Output: 0 or 1 primarily based on discovered sample

Ok-Means Clustering in Python

from sklearn.cluster import KMeans

import numpy as np

# Pattern Knowledge

X = np.array([[1, 2], [2, 3], [3, 4], [8, 9], [9, 10]])

# Ok-Means Mannequin

kmeans = KMeans(n_clusters=2, random_state=0)

kmeans.match(X)

# Output cluster labels

print(kmeans.labels_) # Labels assigned to every knowledge level

Resolution Tree Classifier in Python

from sklearn.tree import DecisionTreeClassifier

import numpy as np

# Pattern Knowledge

X = np.array([[1], [2], [3], [4], [5]])

y = np.array([0, 0, 1, 1, 1]) # Binary classification

# Mannequin Coaching

mannequin = DecisionTreeClassifier()

mannequin.match(X, y)

# Prediction

print(mannequin.predict([[3.5]])) # Anticipated Output: 1

Assist Vector Machine (SVM) in Python

from sklearn.svm import SVC

import numpy as np

# Pattern Knowledge

X = np.array([[1], [2], [3], [4], [5]])

y = np.array([0, 0, 1, 1, 1]) # Binary classification

# Mannequin Coaching

mannequin = SVC(kernel="linear")

mannequin.match(X, y)

# Prediction

print(mannequin.predict([[2.5]])) # Output: 0 or 1

Conclusion

Machine studying algorithms are a algorithm that assist AI programs study and carry out duties. They’re used to find patterns in knowledge or to foretell outcomes from enter knowledge.

These algorithms are the spine of AI-driven options. Figuring out these algorithms empowers you to leverage them extra successfully.

Desire a profession in AI & ML?

Our PG Program in AI & Machine Studying builds experience in NLP, GenAI, neural networks, and deep studying. Earn certificates and begin your AI journey in the present day!

Ceaselessly Requested Questions

1. What’s the bias-variance tradeoff in machine studying?

The bias-variance tradeoff is a basic idea in ML that balances two sources of error:

- Excessive Bias (Underfitting): The mannequin is just too easy and fails to seize knowledge patterns.

- Excessive Variance (Overfitting): The mannequin is just too advanced and suits noise within the coaching knowledge.

- An optimum mannequin finds a stability between bias and variance to generalize nicely to new knowledge.

2. What’s the distinction between parametric and non-parametric algorithms?

- Parametric algorithms assume mounted parameters (e.g., Linear Regression, Logistic Regression). They’re sooner however could not seize advanced relationships.

- Non-parametric algorithms don’t assume a set construction and adapt primarily based on knowledge (e.g., Resolution Timber, Ok-nearest neighbors). They’re extra versatile however require extra knowledge to carry out nicely.

3. What are ensemble studying strategies?

Ensemble studying combines a number of fashions to enhance accuracy and scale back errors. Frequent methods embrace:

- Bagging (e.g., Random Forest) – Combines a number of determination bushes for higher stability.

- Boosting (e.g., XGBoost, AdaBoost) – Sequentially improves weak fashions by specializing in advanced instances.

- Stacking – Combines totally different fashions and makes use of one other mannequin to mixture outcomes.

Understanding the Ensemble Technique Bagging and Boosting

4. What’s characteristic choice, and why is it necessary?

Function choice is the method of selecting probably the most related enter variables for a machine studying mannequin to enhance accuracy and scale back complexity. Methods embrace:

- Filter Strategies (e.g., correlation, mutual data)

- Wrapper Strategies (e.g., Recursive Function Elimination)

- Embedded Strategies (e.g., Lasso Regression)

5. What’s switch studying in machine studying?

Switch studying entails utilizing a pre-trained mannequin on a brand new job with minimal retraining. It’s generally utilized in deep studying for NLP (e.g., BERT, GPT) and laptop imaginative and prescient (e.g., ResNet, VGG). It permits leveraging information from giant datasets with out coaching from scratch.